What is an AP?

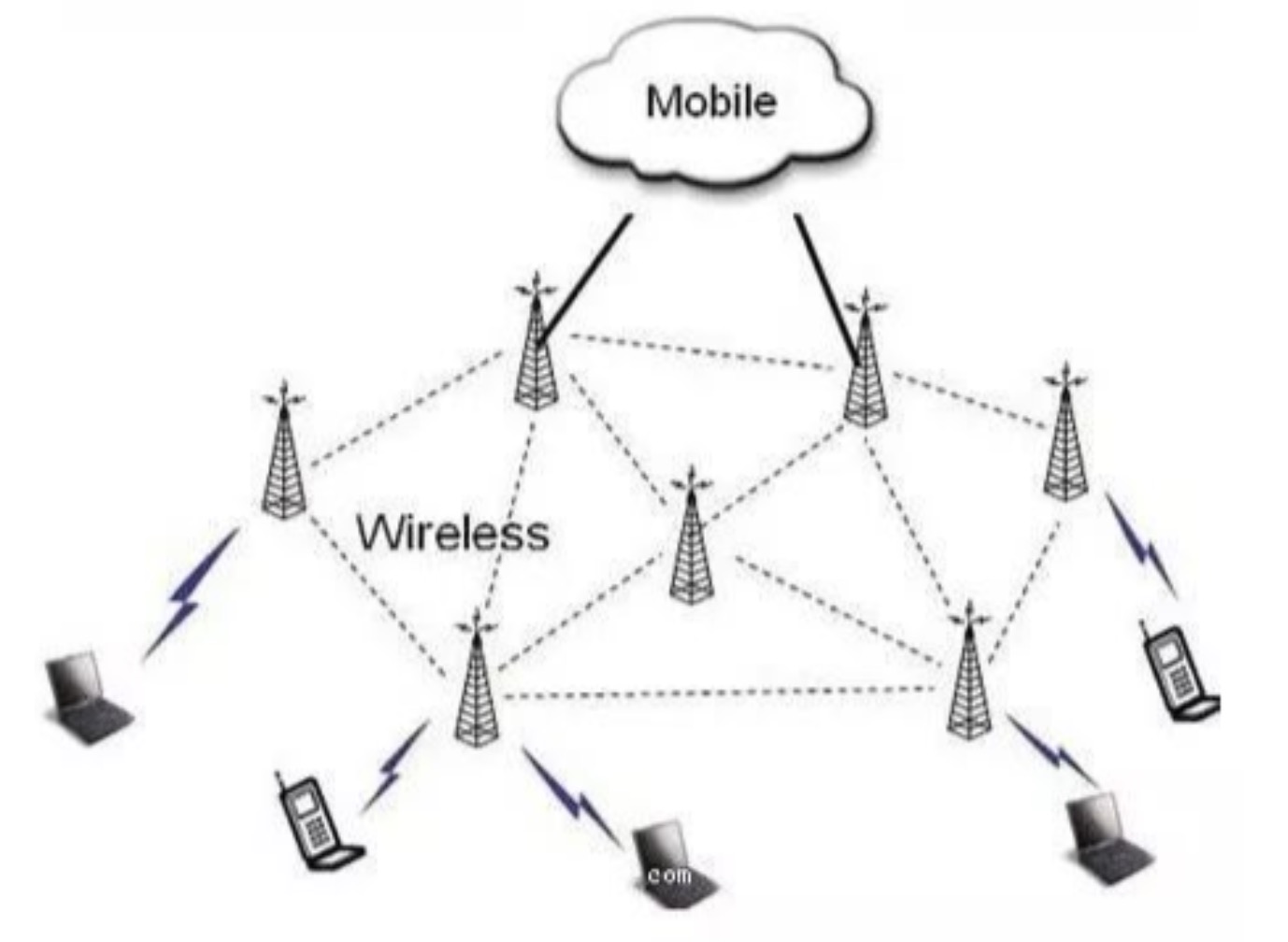

An AP (Wireless Access Point) plays the role that the hub plays in a traditional wired network, and it is the most commonly used device when building a small wireless LAN.

An AP acts as a bridge between the wired and wireless networks. Its main function is to connect wireless clients together and then connect that wireless network to the Ethernet, providing wireless coverage for the network.

What are thin and fat APs?

Thin AP (Fit AP):

Also known as a wireless bridge or wireless gateway; this is the so-called “thin” AP.

In plain terms, a thin AP cannot be configured on its own; it needs a dedicated device (a wireless controller) for centralized management and configuration.

A “controller + thin AP + router” architecture is generally used for wireless coverage, because when there are many APs, managing all of their configuration from the controller alone greatly simplifies the work.

Fat AP:

In the industry, a fat AP is also called a wireless router. A wireless router differs from a pure AP: besides wireless access, it generally has both WAN and LAN interfaces and supports network address translation (NAT), a DHCP server, DNS, and MAC address cloning, as well as security features such as VPN access and a firewall.

What is an AC?

The wireless access point controller (AC) is a network device that centrally controls the manageable wireless APs in a local area network. It is the core of the wireless network and is responsible for managing all of its APs. AP management includes pushing configuration, modifying configuration parameters, intelligent radio-frequency management, and access security control. (Currently, ACs and APs on the market can generally only manage each other when they come from the same manufacturer.)
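To make the controller's role concrete, here is a toy Python model of a “controller + thin AP” setup. It is a sketch, not any vendor's API: the `Controller` and `ThinAP` classes, the vendor names, and the configuration keys are all hypothetical. It shows the two ideas from the text: the AC pushes one central configuration to every AP it manages, and it only adopts APs from its own manufacturer.

```python
from dataclasses import dataclass, field


@dataclass
class ThinAP:
    """Hypothetical thin AP: it keeps no configuration of its own design."""
    mac: str
    vendor: str
    config: dict = field(default_factory=dict)


@dataclass
class Controller:
    """Hypothetical AC that centrally pushes one configuration to all managed APs."""
    vendor: str
    config: dict
    managed: list = field(default_factory=list)

    def adopt(self, ap: ThinAP) -> bool:
        # Per the article: an AC generally manages only its own manufacturer's APs.
        if ap.vendor != self.vendor:
            return False
        self.managed.append(ap)
        return True

    def push_config(self) -> int:
        # Give every managed AP its own copy of the central configuration.
        for ap in self.managed:
            ap.config = dict(self.config)
        return len(self.managed)

    def update(self, key: str, value) -> int:
        # Change one parameter once, centrally, then re-push it to every AP.
        self.config[key] = value
        return self.push_config()
```

With dozens of APs, one `update` call replaces logging in to each AP separately, which is exactly the saving the controller architecture is meant to deliver.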

What is a PoE switch?

PoE (Power over Ethernet), also known as Power over LAN (PoL) or Active Ethernet, is a technology that, with no changes to the existing Ethernet Cat.5 cabling infrastructure, carries data to IP-based terminals (such as IP phones, wireless LAN access points, and network cameras) while also supplying them with DC power.

PoE keeps the existing network running normally and preserves the existing structured cabling, which minimizes cost.

A PoE switch provides not only the data transmission of an ordinary switch but also power to the device at the other end of the network cable. Because power and data travel together, no separate power adapter or PoE injector is needed to power the device; a single Cat.5 cable does all the work.

Standard vs. non-standard PoE

Standard PoE: Under the IEEE 802.3af/at specifications, the power source must first detect the 25 kΩ signature resistance of the receiving device and complete a handshake. Power is supplied only if the handshake succeeds; otherwise, only data is passed.

Example: If you plug a standard PoE supply into a computer's network card, the card will not be burned out; you simply get normal Internet access, because the data still passes.

Non-standard PoE: also called forced-supply PoE. Power is applied as soon as the supply is switched on: the receiving device is not detected first and no handshake takes place; 48 V or 54 V is delivered directly.

Example: If you plug a non-standard PoE supply into a computer's network card, you may still get online normally, but because 48 V or 54 V is applied without any negotiation, the device may be burned out.
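The difference between the two supply types can be sketched as a pair of decision functions. This is a simplified model, not a real PSE implementation: it assumes the IEEE 802.3af detection window, in which a PSE accepts a signature resistance of 19 to 26.5 kΩ (nominally 25 kΩ), and treats everything outside it as invalid, although the standard leaves the 15 to 19 kΩ and 26.5 to 33 kΩ bands to the PSE's discretion. The 48 V figure is the nominal 802.3af voltage; the function names are hypothetical.

```python
from typing import Optional

# Simplified IEEE 802.3af detection window (nominal signature: 25 kOhm).
# Everything outside it is treated as "no valid powered device", which is
# stricter than the standard requires.
VALID_SIGNATURE_OHMS = (19_000, 26_500)
NOMINAL_AF_VOLTS = 48.0


def standard_pse(signature_ohms: float) -> Optional[float]:
    """Standard PoE: apply power only after detecting a valid signature."""
    low, high = VALID_SIGNATURE_OHMS
    if low <= signature_ohms <= high:
        return NOMINAL_AF_VOLTS  # handshake succeeded: power + data
    return None  # no valid signature: data only, no power


def forced_pse(supply_volts: float) -> float:
    """Non-standard (forced) PoE: power is applied at once, with no detection step."""
    return supply_volts


def survives(applied_volts: Optional[float], rated_volts: Optional[float]) -> bool:
    """Rough damage check. None means no power applied / device has no PoE input."""
    if applied_volts is None:
        return True  # only data flows, so the supply cannot damage the device
    # A device with no PoE input (e.g. a PC network card) or a lower rating is at risk.
    return rated_volts is not None and applied_volts <= rated_volts
```

For instance, with an illustrative 150 Ω standing in for a network card (any value far outside the window behaves the same), `survives(standard_pse(150), None)` is `True` because no power is applied, while `survives(forced_pse(48.0), None)` is `False`, matching the two examples above. The same check flags a forced 48 V supply feeding a device built for one of the lower 24 V or 12 V outputs.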

Output voltages on the market are roughly 48 V, 24 V, and 12 V DC.

What software and hardware are needed to deploy a wireless project?

Basic hardware: router, PoE switch, AC controller, wireless APs

High-end hardware: firewall, router, traffic and behavior management (deployed in bypass mode), core switch, floor switches, PoE switches, AC controller, wireless APs

Is a higher-power AP always better?

No. A higher-power AP transmits a stronger signal, and taken literally this suggests that a stronger signal is always better. But a wireless link carries signals in both directions: the transmitter and the receiver each send data to the other. If the AP's signal is far stronger than the client's, the client's return traffic suffers, causing network latency or packet loss.

In plain terms: imagine two people talking in the same room at the same time. If the other person's voice is too loud and yours is too quiet, they cannot hear what you are saying, and the conversation suffers.
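The “loud talker” effect can be quantified with a free-space link budget. The sketch below assumes the standard free-space path-loss formula, FSPL(dB) = 20·log10(d in m) + 20·log10(f in MHz) - 27.55, and uses hypothetical figures: an AP transmitting at 27 dBm, a phone at 15 dBm, and the same -75 dBm receiver sensitivity on both sides. Real indoor ranges are far shorter because of walls, but the asymmetry is the point: the phone keeps hearing the AP well beyond the distance at which the AP can still hear the phone.

```python
import math


def fspl_db(distance_m: float, freq_mhz: float) -> float:
    """Free-space path loss in dB (distance in metres, frequency in MHz)."""
    return 20 * math.log10(distance_m) + 20 * math.log10(freq_mhz) - 27.55


def max_range_m(tx_dbm: float, rx_sensitivity_dbm: float, freq_mhz: float) -> float:
    """Largest free-space distance at which received power still meets sensitivity."""
    budget_db = tx_dbm - rx_sensitivity_dbm  # path loss the link can tolerate
    return 10 ** ((budget_db - 20 * math.log10(freq_mhz) + 27.55) / 20)


# Hypothetical figures: a high-power AP vs. a typical phone, same receiver sensitivity.
AP_TX_DBM, PHONE_TX_DBM, SENSITIVITY_DBM, FREQ_MHZ = 27, 15, -75, 2437

downlink = max_range_m(AP_TX_DBM, SENSITIVITY_DBM, FREQ_MHZ)   # phone can hear the AP
uplink = max_range_m(PHONE_TX_DBM, SENSITIVITY_DBM, FREQ_MHZ)  # AP can hear the phone
print(f"downlink reach ~{downlink:.0f} m, uplink reach ~{uplink:.0f} m")
```

With a 12 dB power gap, the downlink reach comes out about 10^(12/20), roughly 4 times, the uplink reach, so clients in that outer zone see a strong signal yet suffer the delays and packet loss described above.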

What are the key points in a large-scale wireless project?

Key points from an engineering perspective:

Design

Prepare the actual construction drawings and determine the cable routing. Consider concealment, damage to the building (given its structural characteristics), keeping clear of power lines and other cabling while making use of existing space, and providing necessary and effective on-site protection for the cables.

Router location

The router is usually placed in the underground low-voltage (weak-current) equipment room, away from the high-voltage (strong-current) room to avoid strong electromagnetic interference. Keep it well ventilated and dry; ideally, install it in a cabinet together with the core switch.

PoE switch location

Choose the PoE switch location sensibly: place it in the middle of the AP points to reduce wiring cost and shorten the cable runs between the switch and the APs.
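One way to read “in the middle of the AP points” is as the candidate site with the smallest total cable length. This sketch assumes cables follow walls and ceiling trays, so it estimates each run as the Manhattan distance on the floor plan; the coordinates, site names, and function names are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) position on the floor plan, in metres


def cable_run_m(a: Point, b: Point) -> float:
    """Rough cable length, assuming runs follow a wall/ceiling grid (Manhattan distance)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def best_switch_site(candidates: Dict[str, Point], aps: List[Point]) -> Tuple[str, float]:
    """Pick the candidate site with the smallest total estimated cable length to all APs."""
    totals = {
        name: sum(cable_run_m(site, ap) for ap in aps)
        for name, site in candidates.items()
    }
    best = min(totals, key=totals.get)
    return best, totals[best]
```

Total cable length is only one criterion; in practice the chosen site must also satisfy the per-run length limit and have power and a cabinet available.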

AP placement

When laying out AP points, start from the central area of the site and radiate outward. The coverage areas of adjacent APs should partially overlap to reduce blind spots. The cable run between an AP and its PoE switch should not exceed 80 meters (taking genuine Anpu network cable as an example).
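Both placement rules can be checked mechanically before cabling starts. In this sketch, the 80 m limit is the article's rule of thumb, a margin below the 100 m channel length allowed for twisted-pair Ethernet such as 1000BASE-T, and the overlap test assumes every AP has the same circular coverage radius, which is a simplification; AP labels, positions, and function names are hypothetical.

```python
import math
from typing import Dict, List, Tuple

MAX_CABLE_M = 80.0  # article's rule of thumb for AP-to-PoE-switch runs


def over_limit(cable_runs_m: Dict[str, float]) -> List[str]:
    """Labels of APs whose cable run to the PoE switch exceeds the limit."""
    return [label for label, length in cable_runs_m.items() if length > MAX_CABLE_M]


def coverage_overlaps(a: Tuple[float, float], b: Tuple[float, float], radius_m: float) -> bool:
    """Two equal circular coverage areas overlap when their centers are closer than 2r."""
    return math.dist(a, b) < 2 * radius_m


def isolated_aps(positions: Dict[str, Tuple[float, float]], radius_m: float) -> List[str]:
    """APs whose coverage overlaps no other AP: likely blind spots at their edges."""
    return [
        label for label, p in positions.items()
        if not any(coverage_overlaps(p, q, radius_m)
                   for other, q in positions.items() if other != label)
    ]
```

A flagged AP is a prompt to move it, add a neighbor, or move the switch, not a definitive verdict; a site survey remains the real test.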

Cable laying

As the carrier of the network signal, the network cable must be protected during laying, with no breaks or sharp kinks. Where necessary, run it through iron conduit or lay it in the ceiling cable tray. Pay special attention to keeping it away from high-voltage lines to reduce signal interference.

Precautions for on-site commissioning and later maintenance:

a. External network and router: Connect the external (ISP) line and make sure it provides normal Internet access, then connect the router and confirm that the router itself can reach the Internet. During construction, connect the core switch to the floor switches being installed to make sure the backbone network communicates normally.

b. Walkie-talkies for commissioning: During the commissioning stage, arrange to borrow a set of walkie-talkies from the mall to make debugging easier.

c. During construction and commissioning, keep sufficient spares of APs, switches, network cable, and other construction and debugging hardware.

d. Construction drawings: Before each construction phase, ask the contractor to provide us with the following two construction drawings.

Network topology diagram: must detail the floor switches, the router information and location, the number of APs on each floor, and how they are connected.

Equipment connection and cable identification diagram: must show the connection information between the router, switches, and APs and the corresponding ports, plus the approximate theoretical cable length of every link (router to switch to AP).
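The approximate “router to switch to AP” cable length asked for in the connection diagram can be totalled automatically if each device records its upstream device and cable length. This is a hypothetical representation, not a standard file format; the device names, lengths, and function name below are made up for illustration.

```python
from typing import Dict, List, Tuple

# Each device maps to (upstream device, approximate cable length in metres).
Uplinks = Dict[str, Tuple[str, float]]


def path_to_router(uplinks: Uplinks, device: str, router: str = "Router") -> Tuple[List[str], float]:
    """Follow uplinks from a device to the router, returning the hops and total cable length."""
    hops, total = [device], 0.0
    while device != router:
        if device not in uplinks:
            raise KeyError(f"{device} has no recorded uplink")
        device, length = uplinks[device]
        hops.append(device)
        total += length
        # A valid path visits each recorded device at most once; more hops means a loop.
        if len(hops) > len(uplinks) + 1:
            raise ValueError("loop detected in connection diagram")
    return hops, total
```

For example, with `{"1F-1": ("1F-1 SW", 35.0), "1F-1 SW": ("Core SW", 60.0), "Core SW": ("Router", 2.0)}`, the path for AP `1F-1` is `1F-1`, `1F-1 SW`, `Core SW`, `Router`, totalling 97 m. A missing or looping entry raises an error, which also catches gaps in the drawing itself.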

e. Wiring and cable labeling plan:

Information records (AP MAC records): When the contractor installs each AP, record its floor number, location number, and MAC address, matching the AP number on the floor plan. For example, AP No. 1 on the 1st floor is recorded as 1F-1: AC:11:22:33:44:AP. Record this information floor by floor, either in the Word document of the mall's floor construction drawings or by hand in the margin of the printed drawing, for convenient later maintenance.
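A sketch of validating these MAC records, assuming they are kept as plain-text lines in the `1F-1: <MAC>` format described above. The article's example ends in `AP`, which is a placeholder rather than hex digits, so the sketch expects an all-hex address such as `AC:11:22:33:44:55` and rejects the placeholder; the function name is hypothetical.

```python
import re
from typing import Dict, Iterable

# "<floor>F-<AP number>: <MAC>", e.g. "1F-1: AC:11:22:33:44:55"
RECORD = re.compile(r"^(\d+)F-(\d+):\s*((?:[0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2})$")


def parse_records(lines: Iterable[str]) -> Dict[str, str]:
    """Map AP labels such as '1F-1' to upper-case MACs; reject malformed or duplicate lines."""
    table: Dict[str, str] = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        m = RECORD.match(line)
        if m is None:
            raise ValueError(f"malformed AP record: {line!r}")
        label = f"{m.group(1)}F-{m.group(2)}"
        mac = m.group(3).upper()
        if label in table:
            raise ValueError(f"duplicate AP label: {label}")
        if mac in table.values():
            raise ValueError(f"MAC recorded twice: {mac}")
        table[label] = mac
    return table
```

Catching a mistyped or duplicated MAC at installation time is much cheaper than hunting for the physical AP later, which is the point of keeping these records.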

Cable label records:

(1) Switch input and output lines: Label each cable end with the floor and location number of the AP it connects to, matching the AP number on the floor plan (for example, AP 1 on the 1st floor is 1F-1). The line coming in from the external network should also be labeled: “External network access”.

(2) Interconnections between floor switches: Label the head of each inter-switch cable with its source, giving the floor and switch number (for example, switch 1 on the first floor is 1F-1 SW).
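Generating labels from the floor-plan numbering keeps them consistent with the `1F-1` and `1F-1 SW` formats above. The per-switch “label sheet”, its key wording, and the port numbering (ports assigned in the order the APs are listed) are assumptions made for this sketch, not part of the article's scheme.

```python
from typing import Dict, List

EXTERNAL = "External network access"  # label for the incoming external line


def ap_label(floor: int, ap_no: int) -> str:
    """AP / cable label in the article's format, e.g. '1F-1'."""
    return f"{floor}F-{ap_no}"


def switch_label(floor: int, sw_no: int) -> str:
    """Switch label in the article's format, e.g. '1F-1 SW'."""
    return f"{floor}F-{sw_no} SW"


def label_sheet(floor: int, sw_no: int, ap_numbers: List[int], uplink_from: str) -> Dict[str, str]:
    """Hypothetical per-switch label sheet: one entry per AP port plus the uplink cable.

    Ports are numbered from 1 in the order the APs are given; the uplink entry records
    where the incoming cable comes from (another switch, or the external line).
    """
    sw = switch_label(floor, sw_no)
    sheet = {f"{sw} port {i}": ap_label(floor, ap) for i, ap in enumerate(ap_numbers, start=1)}
    sheet[f"{sw} uplink"] = uplink_from
    return sheet
```

Printing one sheet per switch gives the installer every label for that cabinet in one place.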

On-site check that each installed AP is powered on and working normally:

After construction is completed, the construction staff should check on site that every AP powers up normally; when powered, the green indicator on the AP should stay lit. If the router is already installed and running, software can be used to check whether each AP is broadcasting its signal normally and connecting to the Internet.
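For the software check, a minimal sketch is to confirm that each AP's management address answers on the wired side. It assumes each AP has a known management IP and an open TCP port (for example, its web interface); both are hypothetical and vendor-specific, as is the `sweep` helper. A TCP check only proves the AP is powered and reachable over the cable; confirming that it actually broadcasts its SSID still needs a Wi-Fi scanning tool.

```python
import socket
from typing import Dict, List, Tuple


def tcp_reachable(host: str, port: int, timeout_s: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def sweep(aps: Dict[str, Tuple[str, int]]) -> List[str]:
    """Return the labels (e.g. '1F-1') of APs whose management address did not answer."""
    return [label for label, (host, port) in aps.items() if not tcp_reachable(host, port)]
```

Keyed by the same `1F-1` labels as the MAC records, the output points straight at the floor and position to inspect.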

If all the information above is fully recorded, the construction staff need not be on site. If it is not, they will need to assist on site during every commissioning session.

The above is news shared by PASSHOT. We hope it inspires you.