PSTN (Public Switched Telephone Network) is a switched network used for global voice communications. This network has approximately 800 million users and is the largest telecommunications network in the world today.

In everyday life, we use this network whenever we make a call from a landline phone or dial up to the Internet over a home telephone line. It is worth emphasizing that the PSTN was built from the beginning to carry voice.

The PSTN is a circuit-switched network that was originally built on analog technology. Among the many wide area network (WAN) interconnection technologies, interconnection through the PSTN has the lowest communication cost, but its data transmission quality and speed are also the poorest, and its utilization of network resources is relatively low.

The PSTN is also referred to as POTS (Plain Old Telephone Service). It is the collection of all circuit-switched telephone networks built since Alexander Graham Bell invented the telephone. Today, except for the final connection between the subscriber and the local telephone exchange, the public switched telephone network is technically fully digital.

With respect to the Internet, the PSTN provides a considerable part of the Internet's long-distance infrastructure. To use that infrastructure and share its circuits among many users, an Internet service provider (ISP) pays a fee to the owner of the equipment.

In this way, Internet users only need to pay their Internet service provider. The public switched telephone network is a circuit-switched service based on standard telephone lines and is used to connect remote endpoints. Typical applications are connecting remote endpoints to a local LAN and dial-up Internet access for remote users.

The PSTN consists of two parts: a switching system and a transmission system. The switching system is made up of telephone switches, and the transmission system is made up of transmission equipment and cables. Both parts have been developed and improved continuously to keep up with growing user needs.

1. The switching system has developed through roughly the following stages.

In the era of manual switching, calls were connected by hand. To make a call, the caller was first connected to an operator, who then completed the connection to the called party.

In the era of automatic switching, step-by-step and crossbar switches were produced.

In the era of semi-electronic switching, electronic technology was introduced into the control part of the switch.

In the era of space-division switching, stored-program-control switches were created, but the signals they carried were still analog.

In the era of digital switching, following the successful application of pulse code modulation (PCM), digital stored-program-control switches were produced, and the signals they carry are digital.

2. PSTN transmission equipment has evolved from carrier multiplexing equipment to SDH (Synchronous Digital Hierarchy) equipment, and cables have evolved from copper wire to optical fiber.
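The PCM step mentioned in the digital switching stage can be illustrated with a short sketch. It assumes the usual telephony figures of 8,000 samples per second and 8 bits per sample, which give a 64 kbit/s voice channel, and it uses the continuous mu-law curve with mu = 255 as the companding function. Real codecs use a segmented approximation of this curve (or A-law, depending on the region), so the function names and the quantization below are illustrative only, not a bit-exact encoder.

```python
import math

SAMPLE_RATE_HZ = 8000   # assumed telephony sampling rate
BITS_PER_SAMPLE = 8     # assumed code word size
MU = 255                # mu-law parameter (assumption: continuous curve)


def channel_bit_rate(sample_rate_hz=SAMPLE_RATE_HZ, bits=BITS_PER_SAMPLE):
    """Bit rate of one PCM voice channel in bit/s."""
    return sample_rate_hz * bits


def mu_law_compress(x, mu=MU):
    """Map a sample in [-1, 1] onto [-1, 1] with the continuous mu-law curve."""
    return math.copysign(math.log1p(mu * abs(x)) / math.log1p(mu), x)


def encode_sample(x, bits=BITS_PER_SAMPLE):
    """Clip, compress, and quantize one sample to an unsigned integer code."""
    levels = 2 ** bits
    clipped = max(-1.0, min(1.0, x))
    y = mu_law_compress(clipped)
    return min(levels - 1, int((y + 1.0) / 2.0 * levels))


def encode_tone(freq_hz, duration_s):
    """Sample a sine tone and return its list of PCM codes."""
    n = round(SAMPLE_RATE_HZ * duration_s)
    return [
        encode_sample(math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE_HZ))
        for i in range(n)
    ]


if __name__ == "__main__":
    print(channel_bit_rate())          # bit/s for one voice channel
    print(encode_tone(1000, 0.001))    # codes for 1 ms of a 1 kHz tone
```

Companding spends more code values on quiet samples than on loud ones, which is why 8 bits per sample are enough for intelligible speech.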

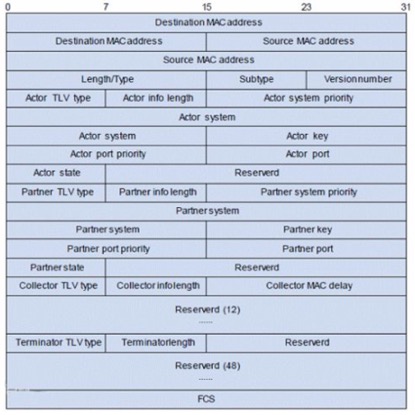

What the PSTN provides is a dedicated analog channel whose path runs through several telephone exchanges. When two hosts or routers are connected over the PSTN, a modem is needed on the network access side at each end to convert between digital and analog signals.

From the perspective of the OSI seven-layer model, the PSTN can be seen as a simple extension of the physical layer: it does not provide users with services such as flow control or error control. Moreover, because the PSTN is circuit-switched, once a path is established its full bandwidth is reserved for the devices at the two ends until the path is released, even when they have no data to send. For this reason, circuit switching cannot make full use of network bandwidth.
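A small calculation makes the bandwidth point concrete. The sketch below is only an illustration: the function name, the link rate, and the traffic figures are assumptions chosen for easy arithmetic, not measurements from any real network.

```python
def circuit_utilization(bits_sent, hold_time_s, link_rate_bps):
    """Fraction of a reserved circuit's capacity that actually carried data.

    The circuit is reserved for the whole hold time, so its capacity is
    hold_time_s * link_rate_bps whether or not anything is transmitted.
    """
    if hold_time_s <= 0 or link_rate_bps <= 0:
        raise ValueError("hold time and link rate must be positive")
    capacity_bits = hold_time_s * link_rate_bps
    return bits_sent / capacity_bits


if __name__ == "__main__":
    # Hypothetical session: the circuit is held for 10 minutes at 64 kbit/s,
    # but only 4.8 Mbit of data is transferred during that time.
    used = circuit_utilization(bits_sent=4_800_000,
                               hold_time_s=600,
                               link_rate_bps=64_000)
    print(f"utilization: {used:.1%}")
```

With these figures, seven eighths of the reserved capacity goes unused, and no other user can claim it while the circuit is up.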

Connecting to a network through the PSTN is relatively simple and flexible. The usual options are as follows:

1. Access through an ordinary dial-up telephone line. A modem is connected in parallel on the existing telephone line at each end, and each modem is then connected to the corresponding networking device. Most networking devices, such as PCs and routers, provide several serial ports, and a serial interface specification such as RS-232 is used between the serial port and the modem. This method is economical: it is billed at the same rate as an ordinary telephone call, so it suits situations where communication is infrequent.

2. Access through a leased telephone line. Compared with an ordinary dial-up line, a leased line offers higher communication speed and better data transmission quality, but at a correspondingly higher cost. A leased line is connected in much the same way as a dial-up line, except that the dialing step is omitted.

3. Access to a public data switching network (X.25, Frame Relay, etc.) from the PSTN over an ordinary dial-up line or a leased line. This is a better way to connect to remote sites, because the public data switching network gives users reliable, connection-oriented virtual circuit service, and its reliability and transmission rate are much higher than those of the PSTN alone.
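The difference between a dial-up line and a leased line can be sketched in terms of the commands a host sends to its modem over the serial port. The example below only builds the command strings and does not open any port. It assumes a modem that accepts the common Hayes-style AT commands (`ATZ` to reset, `ATDT` or `ATDP` to dial, `+++` and `ATH` to hang up); exact command sets vary by modem, and the function names and the phone number are hypothetical.

```python
def dial_up_setup(phone_number, tone_dialing=True):
    """Commands sent before data transfer on a switched (dial-up) line."""
    dial_prefix = "ATDT" if tone_dialing else "ATDP"   # tone or pulse dialing
    return ["ATZ\r", f"{dial_prefix}{phone_number}\r"]


def dial_up_teardown():
    """Escape to command mode, then hang up to release the circuit."""
    return ["+++", "ATH\r"]


def session_commands(mode, phone_number=None):
    """Return the setup and teardown commands for one session.

    mode is "dial-up" or "leased". A leased line is permanently connected,
    so it needs no dialing and no hang-up.
    """
    if mode == "leased":
        return {"setup": [], "teardown": []}
    if mode == "dial-up":
        if not phone_number:
            raise ValueError("dial-up access needs a phone number")
        return {"setup": dial_up_setup(phone_number),
                "teardown": dial_up_teardown()}
    raise ValueError(f"unknown access mode: {mode!r}")


if __name__ == "__main__":
    # "5550100" is a placeholder number used only for illustration.
    print(session_commands("dial-up", "5550100"))
    print(session_commands("leased"))
```

In a real setup these strings would be written to the serial port that connects the host to the modem, and the host would wait for the modem's response before sending data.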
